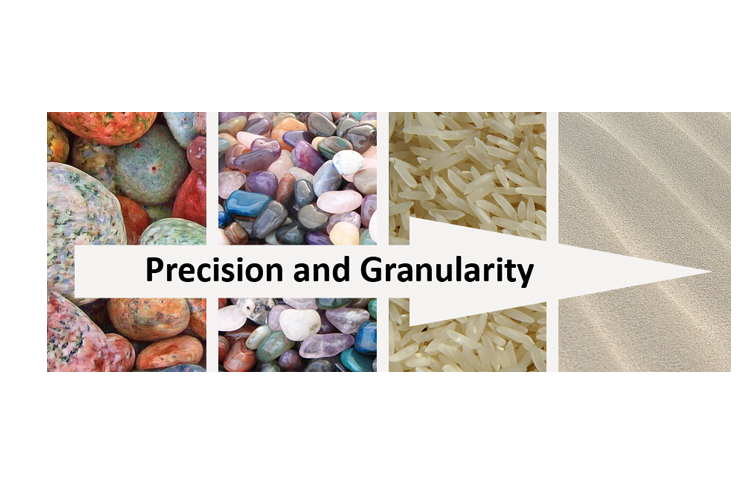

While teaching several classes in Brisbane, Australia, recently, I discussed when it is best to start thinking about each of the Conformed Dimensions of Data Quality (CDDQ) within a typical waterfall Software Development Lifecycle (SDLC). This post does not cover every dimension for every phase. Instead, I use Precision as an example and provide my thoughts on how the other dimensions relate to each phase in a separate document.

Click here to access a spreadsheet with each of the Underlying Concepts of the Conformed Dimensions (release 4.3) grouped by the SDLC phase in which you should start considering them.

To show the value of this approach, I'll expand on the three underlying concepts within the Precision dimension: Precision of Data Value, Granularity, and Domain Precision.

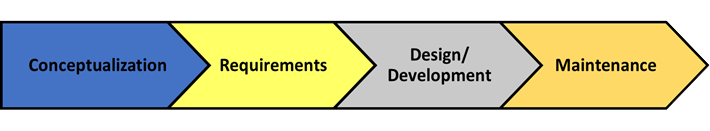

The challenge with precision is that if you don't record the data at the level of detail required for analysis, it's nearly impossible to reverse engineer that data to get to a more granular level. For instance, if you haven't collected the hour of the day in which purchases were made, your ability to identify behavioral patterns relating to when the product was purchased is significantly limited. In the example below, where we have only collected the date of purchase, the only thing we can add retrospectively is the Day of Week, which is derived from the date rather than being a finer level of detail. Using this, we can identify trends in increased or decreased purchases by day (say, on Friday, Saturday, and Sunday, in other words around the weekend). See the example data below.

| Transaction ID | Date (YYYY-MM-DD) | Day of Week (Derived Later) | Line Item | Ice-cream flavor |
| --- | --- | --- | --- | --- |
| 199374 | 2018-07-19 | Thursday | 1 | Fudge ripple ice cream |
| 199374 | 2018-07-19 | Thursday | 2 | Rocky road ice cream |
| 199375 | 2018-07-21 | Saturday | 1 | Lemon custard ice cream |

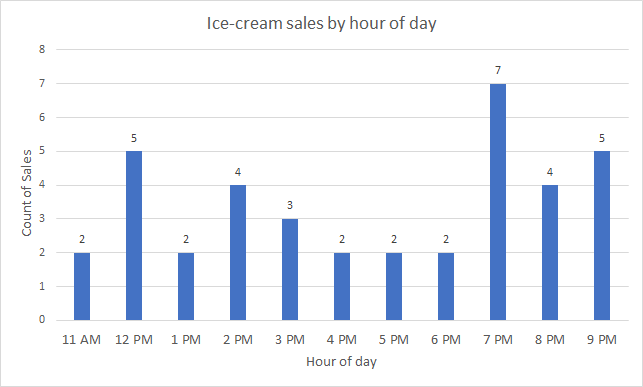
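
The retrospective derivation described above can be sketched in a few lines of Python. This is only an illustration using the three rows from the first table. The names `purchases`, `WEEKDAYS`, and `day_of_week` are made up for this example and are not part of the CDDQ material.

```python
from collections import Counter
from datetime import date

# Rows from the first table: (transaction_id, purchase_date, line_item, flavor).
purchases = [
    (199374, "2018-07-19", 1, "Fudge ripple ice cream"),
    (199374, "2018-07-19", 2, "Rocky road ice cream"),
    (199375, "2018-07-21", 1, "Lemon custard ice cream"),
]

# date.weekday() returns 0 for Monday through 6 for Sunday.
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def day_of_week(iso_date: str) -> str:
    """Derive the weekday name from a YYYY-MM-DD string."""
    return WEEKDAYS[date.fromisoformat(iso_date).weekday()]


# Count line items sold per weekday. This is as far as day-level data goes:
# nothing in the stored value can tell us the hour of the sale.
sales_by_weekday = Counter(day_of_week(d) for _, d, _, _ in purchases)

for weekday, count in sales_by_weekday.items():
    print(f"{weekday}: {count} line item(s)")
```

The weekday can be computed at any time because it is fully determined by the date. The hour of purchase cannot, because it was never captured.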

But would you be able to ensure that the right number of staff were working when you sold the ice cream? For instance, how many staff should you schedule during the noon lunch hour or on late Friday to Sunday nights? Would you know when you had fewer sales than staff bandwidth, meaning you should offer promotions during those times? Unfortunately, the answer to both questions is no. If, however, you collected the hour of day via your point-of-sale system, you would be able to group sales by hour of the day. Consider the following data set as an example, along with the associated chart you could make.

| Transaction ID | Date (YYYY-MM-DD) plus Time | Day of Week | Line Item | Ice-cream flavor |
| --- | --- | --- | --- | --- |
| 199374 | 2018-07-19 14:27 | Thursday | 1 | Fudge ripple ice cream |
| 199374 | 2018-07-09 11:33 | Monday | 2 | Rocky road ice cream |
| 199375 | 2018-07-21 11:21 | Saturday | 1 | Lemon custard ice cream |
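
Grouping by hour, as described above, might look like the following minimal sketch. It uses the three rows of the second table exactly as shown and assumes the timestamp format is `YYYY-MM-DD HH:MM`. The names `sales` and `hour_of_day` are hypothetical.

```python
from collections import Counter
from datetime import datetime

# Rows from the second table: (transaction_id, timestamp, line_item, flavor).
sales = [
    (199374, "2018-07-19 14:27", 1, "Fudge ripple ice cream"),
    (199374, "2018-07-09 11:33", 2, "Rocky road ice cream"),
    (199375, "2018-07-21 11:21", 1, "Lemon custard ice cream"),
]


def hour_of_day(timestamp: str) -> int:
    """Extract the hour (0-23) from a 'YYYY-MM-DD HH:MM' string."""
    return datetime.strptime(timestamp, "%Y-%m-%d %H:%M").hour


# Count line items per hour of day, which is the input a staffing chart needs.
sales_by_hour = Counter(hour_of_day(ts) for _, ts, _, _ in sales)

for hour in sorted(sales_by_hour):
    print(f"{hour:02d}:00  {sales_by_hour[hour]} line item(s)")
```

With real volumes, the same counts could feed a bar chart of sales per hour to compare against the staff roster.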

Would you like to analyze this data yourself? Click here to access the CSV file of this data so you can profile it. If you'd like a copy of the Ice-cream flavor reference data as well, use this CSV file.

Above, we've just illustrated the concept of Domain Precision, which describes the granularity at which we store data in each column. Note that there are variants based on data type. We described this using the date data type, but we could have used continuous numeric (Precision of Data Value) or categorical data types as well. Here are a few examples of each data type.

| Data Type | Example |
| --- | --- |
| Numeric Continuous | Money, Temperature, Speed, etc. |
| Categorical | Ice cream flavors, Geographic States, Cost Centers, etc. |
| Date | Year, Year-month, Year-month-day, etc. |
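
To make the date row concrete, here is a small sketch of how a value recorded at minute precision can be rolled up to any coarser level, while the reverse is not possible. The mapping `DATE_PRECISIONS` and the function `truncate` are names invented for this illustration.

```python
from datetime import datetime

# strftime patterns for each level of date precision, coarsest first.
# The level names are illustrative, not an official CDDQ vocabulary.
DATE_PRECISIONS = {
    "year": "%Y",
    "year-month": "%Y-%m",
    "year-month-day": "%Y-%m-%d",
    "day-hour": "%Y-%m-%d %H",
}


def truncate(ts: datetime, precision: str) -> str:
    """Render a timestamp at the requested (coarser) level of precision."""
    return ts.strftime(DATE_PRECISIONS[precision])


# A timestamp captured at minute precision, taken from the second table.
recorded = datetime(2018, 7, 19, 14, 27)

for level in DATE_PRECISIONS:
    print(f"{level:>15}: {truncate(recorded, level)}")

# Going the other way is impossible: a value stored as "2018-07-19"
# carries no information from which 14:27 could be recovered.
```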

Now, back to where we started this discussion. If you don't know what type of analysis you plan to do with the data, choosing the right level of granularity (table grain) or domain precision (attribute grain) at collection time can be challenging. My recommendation is to store the data at the most granular level possible and document that choice with robust descriptions (Metadata Availability). This is an oldie but goodie, cited both in Martin Zinkevich's (Google) Rules of Machine Learning, Rule #2.2 and 2.3, and in Ralph Kimball's Rule #1: Load detailed atomic data into dimensional structures (by Margy Ross).

In summary, here's the way to think about Precision. We must ensure that data is modeled and stored at the right level of specificity so that it represents the real-world concept in its most natural form. We use the term Granularity to describe how specific a row is, and Domain Precision to describe how specific a value is within a column. In the two example tables above, the granularity is one row per transaction line item (that is, a composite key of Transaction ID and Line Item). In the first table, we defined the Domain Precision of the Date column at the day level. In the second table, we defined it at the day-hour level (technically day-hour-minute, but we didn't use the minute detail).
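
One way to check the stated granularity is to confirm that the composite key identifies each row uniquely. The sketch below assumes the rows are available as dictionaries. The column names and the helper `duplicate_keys` are hypothetical.

```python
from collections import Counter

# The example rows, keyed by hypothetical column names.
rows = [
    {"transaction_id": 199374, "line_item": 1, "flavor": "Fudge ripple ice cream"},
    {"transaction_id": 199374, "line_item": 2, "flavor": "Rocky road ice cream"},
    {"transaction_id": 199375, "line_item": 1, "flavor": "Lemon custard ice cream"},
]


def duplicate_keys(rows, key_columns):
    """Return the key tuples that occur in more than one row."""
    counts = Counter(tuple(row[col] for col in key_columns) for row in rows)
    return [key for key, n in counts.items() if n > 1]


# The declared grain: one row per (Transaction ID, Line Item).
print(duplicate_keys(rows, ("transaction_id", "line_item")))

# Transaction ID alone is too coarse to identify a row.
print(duplicate_keys(rows, ("transaction_id",)))
```

An empty result for the composite key means the table holds the grain it claims. The second call shows that Transaction ID alone repeats, so it cannot serve as the row identifier.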